As an Office 365 admin, should you dedupe files on your SharePoint sites? In the normal case, I think the answer is no. As a general rule, it is cheaper to simply buy more capacity than it is to spend the time rationalizing through other people’s data. But, what if you’ve got a site that you know to be a mess, or maybe you just want to be tidy with your own OneDrive folder?

The second case is why I whipped this up. Over the years, I’ve worked on files that I’ve renamed or copied into different folder structures, making a mess for myself. I wanted to dedupe them (using PowerShell of course!) but I’m using OneDrive’s Known Folder Move, which means most of the contents in Documents, Pictures, and Desktop are not sitting on my hard drive until I access them.

Let’s quickly talk about how you’d normally dedupe content at the file level, which is different than techniques that dedupe content at the storage (e.g. block) level, which we wouldn’t have access to anyway.

- Define the scope of your work. I suggest starting with just the contents of one directory, like your Documents folder. Besides, it would likely cause your users confusion to remove a file from SiteA because you found a copy of the file in SiteB.

- Normally this is where you start grouping files by size (called length in PowerShell). Two files cannot be identical if they are different sizes, so it would be a waste of time to hash them. However, in the case of SharePoint Online (including OneDrive), Microsoft has already hashed each file, so we can skip this step.

- Note: Multiple files with the same name are not necessarily duplicates, so we won’t be evaluating names.

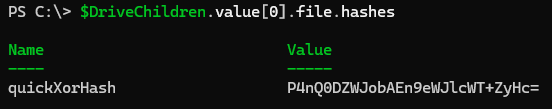

- Because Microsoft has already populated the quickXorHash property, we do not need to compute the hash of the files ourselves.

- As mentioned in the remarks section on the previous link, this attribute is not available in all cases; however, I’ve found it to be present in OneDrive for Business and SharePoint Online. We should err on the side of caution anyway, so we’ll just skip files without this attribute.

- Group files by the quickXorHash property, and report any group that has more than 1 member (where two or more files are the same).

- Generate a report.
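For comparison, here is a minimal sketch of the "normal" approach described above, run against a local folder: group by size first, then hash only the files that share a size. The function name Find-LocalDuplicate is my own invention for illustration, and SHA256 is just one reasonable algorithm choice. Keep in mind that hashing a file that only lives in the cloud forces OneDrive to download it first, which is exactly the cost the quickXorHash approach avoids.

```powershell
# Sketch only: classic size-then-hash dedupe for a LOCAL folder.
# Find-LocalDuplicate is a hypothetical helper name, not part of any module.
function Find-LocalDuplicate {
    param(
        [Parameter(Mandatory)]
        [string]$Path
    )

    Get-ChildItem -LiteralPath $Path -File |
        Group-Object -Property Length |
        Where-Object Count -gt 1 |      # files with a unique size cannot have a twin
        ForEach-Object { $_.Group | Get-FileHash -Algorithm SHA256 } |
        Group-Object -Property Hash |
        Where-Object Count -gt 1 |      # two or more files share this hash
        ForEach-Object {
            [pscustomobject]@{
                Hash  = $_.Name
                Count = $_.Count
                Files = $_.Group.Path
            }
        }
}
```

Calling Find-LocalDuplicate -Path with a folder of your choice returns one object per duplicate group, or nothing if every file is unique.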

The next step would be to decide which instances of a file you want, and then delete the others, but there is a lot to think about before you take that leap. Too much for this article, which will leave you with just the report. Once you’ve figured out which files to remove, it would be simple enough to send the results to Remove-MgDriveItem.

I use the Microsoft Graph PowerShell SDK’s Invoke-MgGraphRequest for all of the API work. If you are just interacting with your own files, you can jump right in. If you want to work on files on other sites, such as the OneDrive of another user account, you’ll want to register an app in your tenant with the Files.ReadWrite.All permission (Files.ReadWrite on its own only covers the signed-in user’s files, and Files.Read.All is enough if all you want is the report).

Quick rant: Why use Invoke-MgGraphRequest instead of the Get-MgDrive* cmdlets?

- The various mg-whatever cmdlets have inconsistent levels of quality. Microsoft has said that these are machine-generated, and in this author’s opinion, that’s why they often don’t work correctly. I’ve felt this way for a few years now, so maybe the quality has improved.

- The cmdlets don’t expose all of the functionality that may be available in the underlying API, or constrain the use of some functionality.

- They obfuscate what’s going on behind the scenes (though I love the -Debug parameter). You learn a lot more about how M365 works by learning the real API.

- Often a cmdlet is more complicated than just using the API directly.

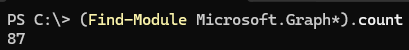

- There are so many modules to install!

Quick caveat: So why use Invoke-MgGraphRequest instead of simply Invoke-RestMethod or Invoke-WebRequest like a true purist?

- It does a nice job of handling authentication in the background, so I don’t have to keep track of tokens.

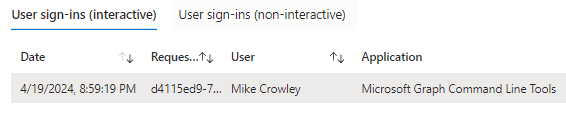

- The app is already registered by Microsoft:

Back to the dedupe report…

We’ll create a folder on your desktop, and assume it is synced to your OneDrive in the normal manner, as mentioned above with the Known Folder Move feature.

# Create some duplicate files

$Desktop = [Environment]::GetFolderPath("Desktop")

$TestDir = mkdir $Desktop\DupesDirectory -Force

$TestLogFile = (Invoke-WebRequest "https://gist.githubusercontent.com/Mike-Crowley/d4275d6abd78ad8d19a6f1bcf9671ec4/raw/66fe537cfe8e58b1a5eb1c1336c4fdf6a9f05145/log.log.log").content

1..25 | ForEach-Object { $TestLogFile | Out-File "$TestDir\$(Get-Random).log" }

# Create some non-duplicate files

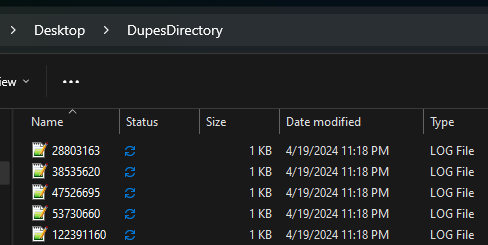

1..25 | ForEach-Object { Get-Random | Out-File "$TestDir\$(Get-Random).log" }You should now have a DupesDirectory folder. Half of the files are duplicates:

Wait for it to sync to OneDrive before moving on:

In this case, we could use the /me/drive URI, but since we’re building a tool for admins, not an app for end-users, we’ll specify the user in the request, even if that is “me” in today’s demo. This lets us use it for other users and incorporate foreach loops in the future.

# A drive request returns a single object, not a collection, so there is no .value wrapper

(Invoke-MgGraphRequest -Uri 'v1.0/me/drive').webUrl

vs

$Upn = "mike@mikecrowley.fake"

(Invoke-MgGraphRequest -Uri "v1.0/users/$Upn/drive").webUrl

Also in this case, we know the folder path to check. It’s ...Documents/DupesDirectory. Let’s use that for now, but I’ll share some thoughts on running this across multiple folders or sites in a little bit. To list the new files, run the below:

Connect-MgGraph -NoWelcome

$Upn = "mike@mikecrowley.fake"

$Drive = Invoke-MgGraphRequest -Uri "beta/users/$($upn)/drive"

$DriveChildren = Invoke-MgGraphRequest -Uri "beta/drives/$($Drive.id)/root:/Desktop/DupesDirectory:/children"

$DriveChildren.value.name

This should list your new files:

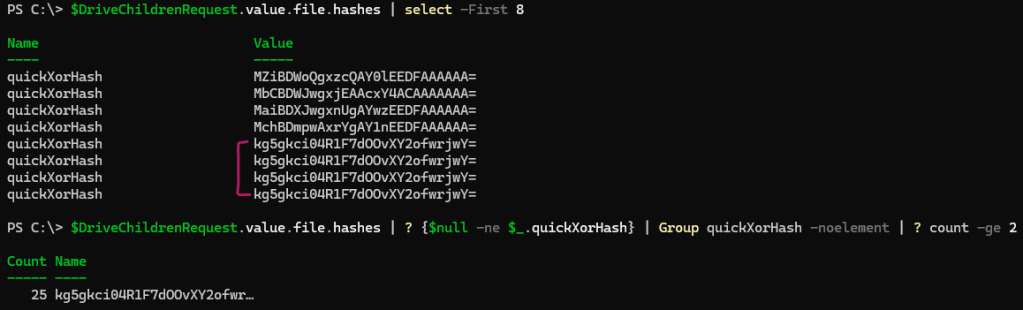

Notice how the files have a quickXorHash attribute:

Hmm, do you see what I see? Duplicates!
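To make the grouping step concrete, here is a small sketch that takes items shaped like the children listing and returns only the groups with more than one member. Group-DriveItemByHash is a name I made up, and the sample hash strings are placeholders rather than real quickXorHash values. It assumes the items are the hashtables that Invoke-MgGraphRequest returns by default, with the hash under file.hashes.quickXorHash.

```powershell
# Sketch only: group drive items by the hash Microsoft already computed.
# Group-DriveItemByHash is a hypothetical helper name.
function Group-DriveItemByHash {
    param(
        [Parameter(Mandatory)]
        [object[]]$Items
    )

    $Items |
        Where-Object { $_.file.hashes.quickXorHash } |          # skip folders and items without the hash
        Group-Object -Property { $_.file.hashes.quickXorHash } |
        Where-Object Count -gt 1                                # keep only real duplicate groups
}

# Stand-in data shaped like the children response (hash values are made up)
$sample = @(
    @{ name = '111.log'; size = 20; file = @{ hashes = @{ quickXorHash = 'AAAA' } } }
    @{ name = '222.log'; size = 20; file = @{ hashes = @{ quickXorHash = 'AAAA' } } }
    @{ name = '333.log'; size = 12; file = @{ hashes = @{ quickXorHash = 'BBBB' } } }
    @{ name = 'SomeFolder'; folder = @{ childCount = 0 } }
)

$duplicateGroups = @(Group-DriveItemByHash -Items $sample)
$duplicateGroups | ForEach-Object { "{0}: {1}" -f $_.Name, ($_.Group.name -join ', ') }
```

Against the real listing, you would pass $DriveChildren.value as the -Items argument instead of the sample array.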

Note: Microsoft limits the output to 200 objects by default, which is fine for our test, but I’ve included code to loop through additional pages, should you have more files in the future.
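Paging through Graph results generally means following the @odata.nextLink value until it stops appearing. The sketch below shows one way to do that; Get-GraphPagedResult is a hypothetical name, and the -Request script block parameter is something I added so the loop can be exercised without a tenant. It is not necessarily how the linked script handles paging.

```powershell
# Sketch only: follow @odata.nextLink until the last page.
# Get-GraphPagedResult and its -Request parameter are hypothetical.
function Get-GraphPagedResult {
    param(
        [Parameter(Mandatory)]
        [string]$Uri,

        # Defaults to a real Graph call; pass a different script block to test offline
        [scriptblock]$Request = { param($u) Invoke-MgGraphRequest -Uri $u }
    )

    $next = $Uri
    while ($next) {
        $page = & $Request $next
        $page.value                       # emit the items on this page
        $next = $page.'@odata.nextLink'   # missing on the last page, which ends the loop
    }
}
```

With a connected session, passing the children URI from earlier as -Uri should return every item in the folder, not just the first page.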

# Add a couple of extra duplicate groups to make the report interesting:

1..25 | ForEach-Object { "Hello World 1" | Out-File "$TestDir\$(Get-Random).log" }

1..25 | ForEach-Object { "Hello World 2" | Out-File "$TestDir\$(Get-Random).log" }

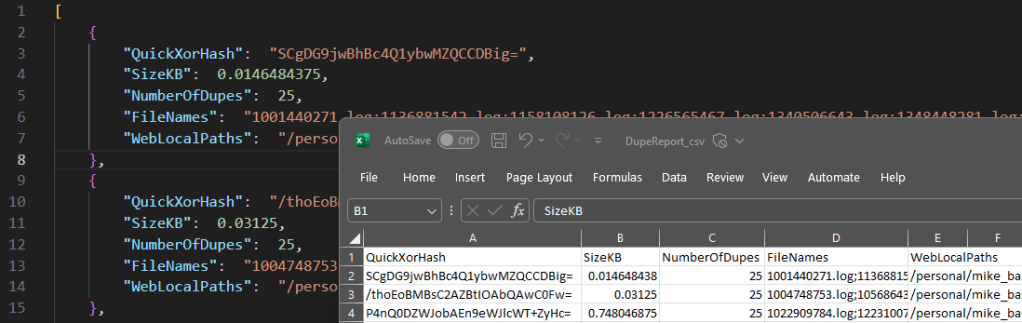

The script will place two files on your desktop: one in CSV and the other in JSON, which I find to be an easier format for evaluating the duplicate file groups.
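As a rough illustration of the two output shapes, the sketch below writes one row per file to CSV and one object per duplicate group to JSON. Export-DuplicateReport, the file names, and the chosen columns are all my own assumptions for this example and may differ from what the full script produces.

```powershell
# Sketch only: write a flat CSV and a grouped JSON report.
# Export-DuplicateReport and the output file names are hypothetical.
function Export-DuplicateReport {
    param(
        [Parameter(Mandatory)][object[]]$Items,
        [Parameter(Mandatory)][string]$OutputDirectory
    )

    $groups = $Items |
        Where-Object { $_.file.hashes.quickXorHash } |
        Group-Object -Property { $_.file.hashes.quickXorHash } |
        Where-Object Count -gt 1

    # One object per duplicate group: easy to read as JSON
    $report = foreach ($g in $groups) {
        [pscustomobject]@{
            QuickXorHash = $g.Name
            Count        = $g.Count
            Files        = @($g.Group | ForEach-Object {
                    [pscustomobject]@{ Name = $_.name; Size = $_.size; WebUrl = $_.webUrl }
                })
        }
    }

    # One row per file: easy to sort and filter as CSV
    $rows = foreach ($r in $report) {
        foreach ($f in $r.Files) {
            [pscustomobject]@{
                QuickXorHash = $r.QuickXorHash
                Name         = $f.Name
                Size         = $f.Size
                WebUrl       = $f.WebUrl
            }
        }
    }

    $csvPath  = Join-Path $OutputDirectory 'DuplicateReport.csv'
    $jsonPath = Join-Path $OutputDirectory 'DuplicateReport.json'

    @($rows) | Export-Csv -Path $csvPath -NoTypeInformation
    ConvertTo-Json -InputObject @($report) -Depth 4 | Out-File -FilePath $jsonPath

    [pscustomobject]@{ Csv = $csvPath; Json = $jsonPath }
}
```

The -Items argument is the same list of drive items used for grouping, and -OutputDirectory could be the $Desktop variable from earlier.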

Here is the complete code:

<Code updated below>

Some thoughts on searching multiple folders, document libraries, or sites:

Want to search all files on your OneDrive, not just one level of the DupesDirectory folder? It will take more work. First, swap out this line:

$uri = "beta/drives/$($drive.id)/root:/Desktop/DupesDirectory:/children"

With this instead:

$uri = "beta/users/$($upn)/drive/root/search(q='')"

Be warned – it may take a long time. It also depends on Microsoft’s search index, which may be slower or incomplete, making testing in quick succession unreliable. I got tens of thousands of files returned for my OneDrive, so that felt substantial enough. Unfortunately, and here is the bummer, this does not populate the hashes property. That means you’d need to feed each result back into another call, getting the file explicitly. I will update this post if I find a better solution.
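Here is a rough sketch of that "feed it back in" idea: take the search results and request each item by ID so the response includes the file facet and its hashes. Get-DriveItemWithHash is a hypothetical name, the -Request script block exists only so the loop can be tried offline, and I am assuming that folders in the search results carry a folder facet. One request per file will be slow on a large drive, so treat this as a starting point rather than a finished solution.

```powershell
# Sketch only: re-request each search result by ID to obtain its hashes.
# Get-DriveItemWithHash and its -Request parameter are hypothetical.
function Get-DriveItemWithHash {
    param(
        [Parameter(Mandatory)][string]$DriveId,
        [Parameter(Mandatory)][object[]]$SearchResults,

        # Defaults to a real Graph call; pass a different script block to test offline
        [scriptblock]$Request = { param($u) Invoke-MgGraphRequest -Uri $u }
    )

    foreach ($item in $SearchResults) {
        if ($item.folder) { continue }   # assumption: folders expose a folder facet; nothing to hash

        # The backtick stops PowerShell from expanding $select as a variable
        & $Request "beta/drives/$DriveId/items/$($item.id)?`$select=id,name,size,webUrl,file"
    }
}
```

The output can then be grouped on file.hashes.quickXorHash exactly like the children listing.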

You may want to check for duplicate files across more than just a single drive. In this case, you’ll need to modify the script to include multiple queries (for each site), combining the results into a single array. Otherwise the logic would be the same.

My goal with this article was to introduce the quickXorHash property and show how it can be useful with a dedupe report. If you have more questions about navigating SharePoint or OneDrive through Graph, leave questions in the comments.

EDIT: 23 April 2024: Here is a draft of a better version. Once testing is complete, I will update this article to use this file, which handles recursion, page size and other features.

https://github.com/Mike-Crowley/Public-Scripts/blob/main/Find-DriveItemDuplicates.ps1